Mankind’s struggle to convey meaning ‘twixt pen and mind has long been a problem for anyone wishing to communicate effectively. Optical Character Recognition (OCR) is a modern solution to this age-old challenge, brought into the present day need for machines to understand human-readable glyphs and characters.

How many times have you been frustrated that you cannot select the text in an image? How often would you find the image you are searching for if you could only type in a key word that you know the image contains, only to realize that your computer is not capable of “reading” the contents of that image? How much time have you wasted typing words that already exist in picture form?

I remember learning to type in the 80’s on a real, honest-to-goodness typewriter. I was using keyboards before I “learned to type”, but hadn’t yet formed good habits with the home row position. I had a stand that would hold a book, and my process was to type the characters written in the book to reproduce them on a physical sheet of paper. Mistakes were pretty bad, as there was real ink hitting the paper and an embossed depression made by the hammer striking the physical page.

Now I can point the supercomputer I keep in my pocket at any text in almost any language and copy an English translation as text in real time, standing in the middle of nowhere. How did technology come this far within our lifetime? The short answer is hard work.

Optical Character Recognition, or OCR, is the process of programmatically identifying characters visually and converting that to the best-guess equivalent computer code.

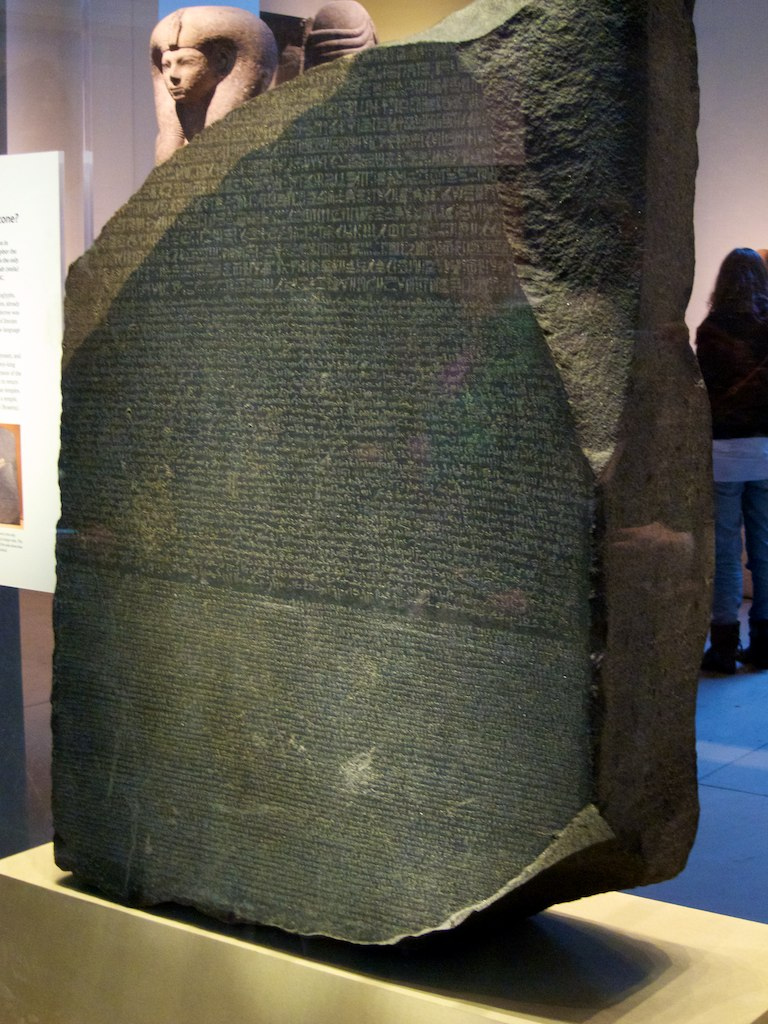

There are a LOT of languages on this planet. So many, in fact, that some of them have faded from memory and require quite a lot of detective work to decipher. Ancient Egyptian hieroglyphics were quite a mystery until the discovery of the Rosetta Stone. When we can see an example of something next to an example of something else, it is possible to compare those things. When we see, for example, Greek (a language we know) next to Ancient Egyptian (a language we lost), we can systematically draw conclusions. Surprisingly, that’s how OCR works its magic.

Computers can’t actually read anything. What they can do is draw extremely accurate comparisons between examples of text and the patterns present in an image. Let’s think through what it would take to write an OCR program from scratch. Take a close look at the lowercase letter A here:

a

Pretty simple, right? There’s a kind of circle with a little tail, and a hook that rises out of the top. If we map that out on a grid, it might look something like this:

00111000 00000100 01111100 00100100 00111100 00000010

I just typed 8 zeros on a line, then made a grid by pasting it into several rows. Then I replaced zeros with 1’s everywhere you’d find ink if you drew that shape in that box. We could say that any image that results in an exact match with this pattern is a picture of the lowercase letter “a”.

I’m already seeing a problem here, though. My lowercase a is super blocky, which is not likely to be a perfect match with anything other than an 8-bit font. I could increase the number of data points so it can smooth out into a nice, high-resolution pattern. I could add patterns to match various fonts, and even handwriting. There’s a lot to do, just to get one single letter to match. When we think we’re done, all of sudden we realize we’re matching more than we intended…

We could keep refining our process, adding more and more layers of complexity to cover more and more use cases, but that’s been a joint effort by many brilliant minds over many years already. Instead of reinventing that wheel, we can continue adding value by building our business rules and logic on top of all of that hard work.

Standing on the Shoulders of Giants

The first OCR tools in modern history were developed in the early-to-mid 1900’s, but the ideas were brewing for years before that. The Nipkow Disk was an image scanning device all the way back in 1885. Later devices started by interpreting Morse Code to read text aloud. The first scanners capable of reading text required that the text be in a special font that was easy for the scanning software to recognize. In 1974, Kurzweil Computer Products developed the first omni-font software.

Much of the first work in this space was in English, using the Latin alphabet. In 1992, the Cyrillic alphabet got some love and Russian text could be digitized.

I remember buying scanners in the late 90’s based in part on what OCR software was included with the purchase. The reviews were important, too, as accuracy and efficiency is a huge factor.

By the year 2000 we had online OCR services that could translate scanned text into other languages, and this is when OCR really picked up some steam.

By moving the processing from the scanner (or the computer it was attached to) up into the cloud allowed OCR to shift from being a product to becoming a service. We’re still able to build value on top of these services in several critical ways:

Open Source: Decentralized Collaboration

Software that is shared openly across the industry allows everyone to pitch in together on improvements and innovations. OCR software used to be competitive and closed. This led to uncertain results between various vendors and wasn’t a very efficient way for society to advance this technology.

SaaS: Centralized Collaboration

When companies that work on OCR software provide it as a service, they have a relationship with their customers. Rather than creating a piece of software, selling it and hoping the customer is happy, SaaS providers engage with their customers to continuously improve the quality, flexibility and functionality of the process.

Machine Learning

Instead of humans writing increasingly complex use cases to identify letters in different fonts, different languages and their scripts, as well as different degrees of image quality, we can guide Machine Learning by providing samples of images with text and teaching them to draw their own conclusions automatically through ML “Models”.

Why this all matters (a lot)

Converting from one form of communication to another is time consuming. Time is valuable, so the more we can reduce the time necessary to perform what should be mindless, routine processing, the better society fares as a whole. For you as an individual, it means convenience and productivity in your personal life. For your business, it means real savings to your bottom line. Partnering with a SaaS provider means you don’t even need to spend your time getting set up to save that time; just establish your requirements, and boom—you’re up and running. This is an essential strategy for companies increasingly challenged by the rise of Big Content. There’s just no humanly-sustainable way to handle the Big Content problem without automation. Machine Learning helps us to scale the accuracy and flexibility of OCR, and automation through business-configured workflows makes sure that this flood of data we’re struggling to keep up with gets handled gracefully, consistently, and reliably by tools that work for us.

The Struggle of Good vs Evil

‘Member CAPTCHAs? Some tricky image of text required you to squint at your screen while guessing what is written, then type that guess into a text box to “prove” that you’re a human. Why does this matter? Well, for some reason there’s a very active, very busy segment of society that believes that pushy, mindless postings of advertisements will earn them a lot of money. Unfortunately, they’re right. Statistically, humans are pretty gullible. Worse yet, computers are still a pretty new way to communicate. The more consumers become familiar with scams, spam, and outright lies, the more this kind of behavior will require increasingly intelligent attacks to be successful. There will always be a struggle between machines making our lives easier and bad actors trying to take advantage of that, but lots of fun and exciting changes are emerging to manage these challenges.

David Liedle is Filestack’s Chief Evangelist. He works remotely in New York City with his wife and son, and their kitty. See more at https://DavidCanHelp.me/

Read More →