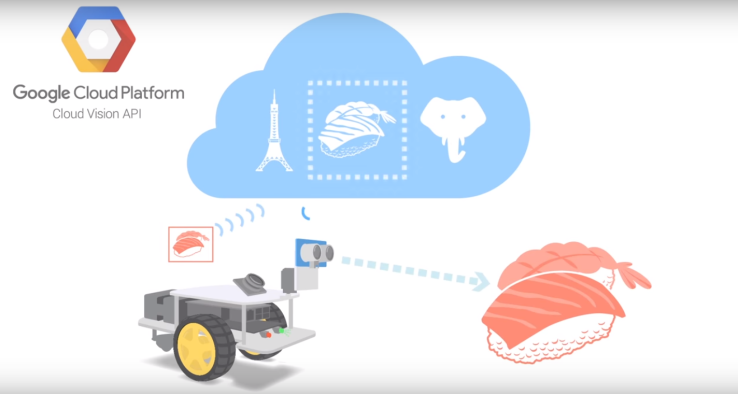

A few weeks ago, we published our thoughts and research into the major image-labeling service providers, including Microsoft Cognitive Services, Amazon Rekognition, Google Vision, and Clarifai. While we believed each had its merits and all returned accurate labels for most inputs, a combination of speed, content-detection and cost led us to choose Google Vision as the engine for our Image Tagging API.

It is a decision we are, in large part, happy with. The service works almost as expected (more on that in a second), and after upgrading to the newest Python cloud library, we are no longer forced to send raw image bytes or a Google storage link in our request, but have the option of simply providing an external URL. This eliminates a once-glaring disadvantage Google Vision had relative to Clarifai, and significantly improves usability and computational efficiency on our end.

That is not to say the experience has been perfect, and considering how new this entire market is, that should come as no surprise. While Google Vision passed all of our initial tests, user reports of random images returning errors started filtering in. After resolving a few load-balancing issues of our own, we found that, occasionally, specific images (no matter how they were processed) would return an empty response dictionary in the Python library. An error message or a 400 status code would at least have told us something, but this was merely an empty dictionary, with nary a clue as to what went wrong.
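Because an empty dictionary carries no status or message, one way to handle it is to turn it into an explicit error at the call site. The snippet below is only a minimal sketch of that idea: `EmptyLabelResponse` and `require_labels` are hypothetical names of our own, not part of any Google library, and the response is assumed to be a plain Python dictionary.

```python
class EmptyLabelResponse(Exception):
    """Raised when a labeling call returns no payload at all (hypothetical helper)."""


def require_labels(response, image_url):
    """Return the response unchanged, or raise if it is empty.

    An empty dict (or None) gives the caller nothing to inspect, so we
    convert it into an exception that names the offending image URL.
    """
    if not response:
        raise EmptyLabelResponse(f"empty response for {image_url}")
    return response
```

Raising here keeps the silent failure from travelling further into the application, where it is much harder to trace back to a specific image.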

Investigating the Bug

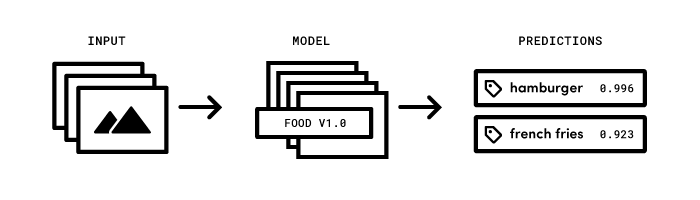

Google has been actively working with us to solve this problem, but despite our being able to reproduce it on two separate versions of their library and with many different tests, they have yet to figure out the cause. This led us to do more rigorous testing between Google Vision and Clarifai, the two front-runners as far as we were concerned, to see if the problem might lie in our URLs or in how we were processing them. Google has pretty specific best practices for how to size, convert and process inputs to their service, so maybe it was related to that.

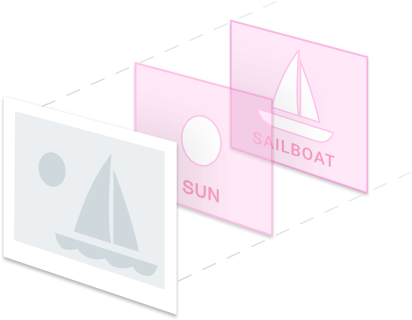

Alas, URLs continued to fail (at a rate of about 3 per 1000 after upgrading to the newer library) with four different processing methods and a control group of the unadulterated URL (the control group, random Flickr images, actually failed at a much higher rate, but their size and resolution couldn’t be guaranteed to fall within the aforementioned best practices). It seemed, in some weird way, to relate to specific image content: the actual visual features of the images we were putting in. Was there something about the angle, the lighting, the objects that confused the model?
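A comparison like this boils down to counting empty responses per processing method. The following is a rough sketch of that bookkeeping rather than our actual test harness: `failure_rates` is a hypothetical helper, `label_fn` stands in for whatever call reaches the labeling service, and an empty or missing result is assumed to count as a failure.

```python
def failure_rates(urls_by_method, label_fn):
    """Return {method name: failures per 1000 requests}.

    urls_by_method maps a processing-method name to a list of image URLs.
    label_fn is any callable taking a URL and returning a dict of labels;
    a falsy result (empty dict or None) is counted as a failure.
    """
    rates = {}
    for method, urls in urls_by_method.items():
        if not urls:
            # Avoid dividing by zero for a method with no samples.
            rates[method] = 0.0
            continue
        failures = sum(1 for url in urls if not label_fn(url))
        rates[method] = 1000 * failures / len(urls)
    return rates
```

Running the same set of images through each processing method and comparing the resulting numbers is what lets you separate "our preprocessing is at fault" from "this particular image is at fault".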

That impression was reinforced by the fact that Clarifai did not fail at all in our tests, no matter the image, whether a bare Flickr URL or one prepared by our Filestack Processing engine. While Google has of late been a behemoth of image labeling and object recognition, turning a meme into a disruptive research paper, it’s worth remembering that Clarifai has been at this for longer and was born of a first-place classification prize in the ImageNet Large Scale Visual Recognition Challenge. They may lack the infrastructure to ensure consistent response times, but it’s nonetheless probable their actual classification technology is, in fact, superior to Google’s.

Solving the Bug: Leveraging Clarifai Against Competitors

Which led us to our solution: simply add Clarifai as a backup for when Google Vision fails. Not only does this mean we should see our error rate come down to zero or close to it, we now also have the ability to compare Vision and Clarifai on real user input, over a long period of time. It opens up the possibility of a comprehensive analysis of the two services under real-world usage. Because these services are still in their infancy, with unknown adoption rates within the industry, high-quality data on their value and performance is lacking. Hopefully this is something Filestack can help to change, to ensure our customers and their users get the absolute best performance and accuracy for their image labeling needs.
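In outline, the fallback can look something like the sketch below. This is an illustration under assumptions, not our production code: `label_with_fallback` is a hypothetical function, and `primary` and `backup` are placeholders for whatever wrappers call Google Vision and Clarifai respectively. Each is assumed to take an image URL and return a dictionary of labels.

```python
import logging

logger = logging.getLogger("image_tagging")


def label_with_fallback(image_url, primary, backup):
    """Label an image with the primary provider, falling back to the backup.

    Returns a (provider_name, labels) tuple so callers can record which
    service actually answered each request.
    """
    try:
        result = primary(image_url)
    except Exception:
        # Treat an exception from the primary like an empty response.
        logger.exception("primary provider raised for %s", image_url)
        result = None

    if result:
        return "primary", result

    # Empty dict or None: the silent failure described above.
    logger.warning("primary returned nothing for %s, using backup", image_url)
    return "backup", backup(image_url)
```

Returning the provider name alongside the labels is a deliberate choice: logging it per request is what makes a long-running comparison of the two services on real user input possible.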
