Prior to integrating image tagging into our API, we at Filestack evaluated four of the most popular image tagging services. To determine which service to use, we looked at features, pricing, image size limits, rate limits, performance, and accuracy. Ultimately, we decided to go with Google Vision, but the other services might be a good fit for your project.

Background

One of the things Filestack prides ourselves on is providing the world’s top file handling service for developers, and in effect, building the files API for the web. Whether you want to integrate our uploader widget with a few lines of code or you want to build a custom uploading system on top of our APIs, we want to provide you a rock solid platform coupled with an excellent experience.

Over time, our customers have progressively asked for more detailed data about their files and uploads, and image tagging became one of their most requested features. In past years, more than 250,000,000 files were uploaded through Filestack, with images accounting for more than 85% of those uploads. With photos dominating uploads, exchangeable image file format (Exif) data extraction and automated object recognition (image tagging) are becoming central to data analytics.

Exif data is not new to Filestack: customers today can query an uploaded image’s metadata to see the Exif payload. Much like the decision we made to partner with a CDN, the Filestack engineering team had a decision to make: do we build an image tagging service, or do we look for a best-of-breed partner to go to market with? This decision was a little more complicated because we already had a facial detection engine, so there was some scaffolding of a product, whereas content delivery was something we had no software investment in.

The AI landscape has taken off, and with it we see image recognition systems like Clarifai and Imagga, as well as large technology incumbents such as Microsoft, Google, and Amazon. It feels like there is a newcomer every few days, and competition is good! This is going to produce better platforms, happier customers, and more creative uses of this technology. In the interest of time, we decided to pick a handful of players in this space and put them through their paces. Speed and accuracy were the two categories we prioritized; there is an almost endless number of success criteria one could apply here, so we tried to keep it simple.

Comparing 4 popular object recognition APIs

The four platforms we put to the test were:

Google Cloud Vision

Based on the TensorFlow open-source framework that also powers Google Photos, Google launched the Cloud Vision API (beta) in February 2016. It offers multiple functions, including optical character recognition (OCR), as well as face, emotion, logo, inappropriate content and object detection.

Microsoft Cognitive Services

Formerly known as Project Oxford, Microsoft Cognitive Services encompasses 22 APIs, including a wide variety of detection APIs such as dominant color, face, emotion, celebrity, image type and not-safe-for-work (NSFW) content. For the purposes of our object recognition testing, we focused on the Computer Vision API (preview), which employs the 86-category concept for tagging.

Amazon Rekognition

Amazon Rekognition is an image recognition service that was propelled by the quiet Orbeus acquisition back in 2015. Rekognition is focused on object, facial, and emotion detection. One major difference from the other services tested was the absence of NSFW content detection. Moving forward, Amazon has thrown their full support behind MXNet as their deep-learning framework of choice.

Clarifai

Founded in 2013, and winner of the ImageNet 2013 Classification Challenge, Clarifai is one of the hottest startups in the AI space, having raised over 40 million in funding. Led by machine learning and computer vision guru Matthew Zeiler, Clarifai is making a name for itself by combining core models with additional machine learning models to tag in areas like “general,” “NSFW,” “weddings,” “travel,” and “food.”

High-level feature platform overview

Feature Comparison

| Amazon | Clarifai | Microsoft | ||

| Image Tagging | Yes | Yes | Yes | Yes |

| Video Tagging | Yes | Yes | Yes | Yes |

| Emotions detection | Yes | Yes | Yes | Yes |

| Logo detection | Yes | Yes | Yes | Yes |

| NSFW tagging | Yes | Yes | Yes | Yes |

| Dominant color | Yes | Yes | Yes | Yes |

| Feedback API | No | Yes | Yes | No |

Image Size Limits

| Amazon Rekognition | 5 MB / Image, 15 MB / Image from S3 |

| Google Vision API | 20 MB / Image |

| Clarifai | No data in documentation |

| Microsoft Computer Vision API | 4 MB / Image |
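A pre-flight size check is one way to act on these limits before sending a file anywhere. The sketch below is a minimal illustration, not how Filestack does it: the dictionary keys and the `services_accepting` helper are hypothetical names, the limits are copied from the table above, and treating 1 MB as 1024 × 1024 bytes is an assumption, since the providers may count differently.

```python
# Limits copied from the table above, expressed in bytes.
# Assumption: 1 MB = 1024 * 1024 bytes; providers may define it differently.
MB = 1024 * 1024

# Hypothetical keys. Clarifai is omitted because its documentation
# listed no size limit at the time of testing.
SIZE_LIMITS = {
    "amazon_rekognition": 5 * MB,
    "amazon_rekognition_s3": 15 * MB,
    "google_vision": 20 * MB,
    "microsoft_computer_vision": 4 * MB,
}


def services_accepting(size_bytes):
    """Return the services whose documented limit admits a file of this size."""
    return sorted(
        name for name, limit in SIZE_LIMITS.items() if size_bytes <= limit
    )
```

With these numbers, a 6 MB image would only be accepted by Google Vision or by Amazon Rekognition when read from S3.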

Rate limits

| Amazon Rekognition | Not defined in documentation |

| Google Vision API | Varies by plan |

| Clarifai | Varies by plan (we get 30 rps for testing purposes) |

| Microsoft Computer Vision API | 10 Requests per second |

With the stage set, we were ready to test for the two characteristics we cared about the most: performance and accuracy.

Performance Testing

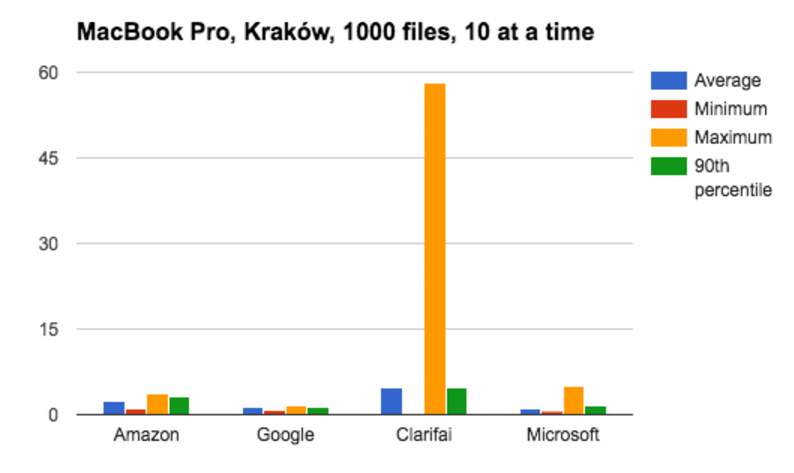

MacBook Pro, Kraków, 1000 files, 10 at a time

| | Average | Minimum | Maximum | 90th percentile |

| Amazon | 2.42s | 1.03s | 3.73s | 3.21s |

| Google | 1.23s | 0.69s | 1.68s | 1.42s |

| Clarifai | 4.69s | 0.1s | 58.16s | 4.78s |

| Microsoft | 1.11s | 0.65s | 5.07s | 1.5s |

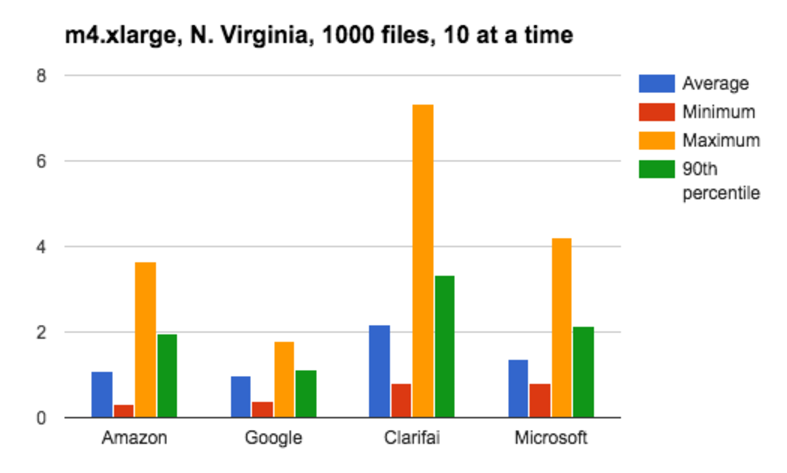

N. Virginia, 1000 files, 10 at a time

| | Average | Minimum | Maximum | 90th percentile |

| Amazon | 1.1s | 0.302s | 3.64s | 1.97s |

| Google | 0.98s | 0.4s | 1.79s | 1.12s |

| Clarifai | 2.17s | 0.81s | 7.35s | 3.34s |

| Microsoft | 1.38s | 0.81s | 4.22s | 2.14s |

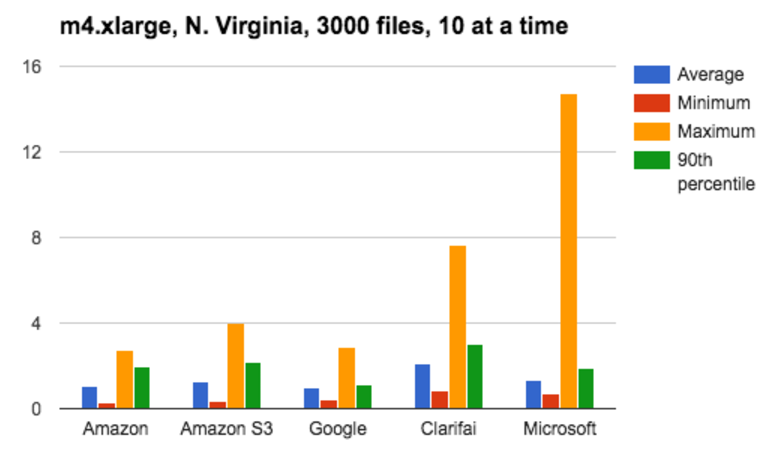

N. Virginia, 3000 files, 10 at a time

| | Average | Minimum | Maximum | 90th percentile |

| Amazon | 1.08s | 0.25s | 2.71s | 1.96s |

| Amazon S3 | 1.26s | 0.35s | 4.02s | 2.17s |

| Google | 0.97s | 0.41s | 2.87s | 1.11s |

| Clarifai | 2.08s | 0.84s | 7.63s | 3.05s |

| Microsoft | 1.31s | 0.73s | 14.74s | 1.87s |
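For readers who want to run a similar test, here is a rough sketch of how the "10 at a time" runs and the four summary columns could be produced. It is not the harness we used: `tag_image` stands in for whatever function calls a provider, the thread pool is just one way to keep ten requests in flight, and the nearest-rank definition of the 90th percentile is an assumption.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(tag_image, path):
    """Return the wall-clock seconds that one tagging call takes."""
    start = time.perf_counter()
    tag_image(path)  # hypothetical callable that sends one image to a provider
    return time.perf_counter() - start


def run_benchmark(tag_image, paths, concurrency=10):
    """Time tag_image over all paths with at most `concurrency` calls in flight."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(lambda path: timed_call(tag_image, path), paths))


def summarize(latencies):
    """Compute average, minimum, maximum and 90th percentile (nearest rank)."""
    ordered = sorted(latencies)
    # Nearest-rank percentile: ceil(0.9 * n), done in integer arithmetic.
    rank = (9 * len(ordered) + 9) // 10
    return {
        "average": sum(ordered) / len(ordered),
        "minimum": ordered[0],
        "maximum": ordered[-1],
        "p90": ordered[rank - 1],
    }
```

Calling `summarize(run_benchmark(my_tagger, paths))`, where `my_tagger` is your own provider wrapper, yields one row of a table like those above. The 90th percentile is worth reporting next to the average because a single slow outlier, such as a 58-second maximum, distorts the average far more than it distorts the percentile.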

What did we learn?

- Google Vision API provided us with the steadiest and most predictable performance during our tests, but it does not allow ingestion by URL. In order to use it, we had to send the entire file, or we could alternatively use Google Cloud Storage to save on bandwidth costs.

- Microsoft showed reasonable performance, with some higher response times under high load.

- Amazon Rekognition supports ingestion directly from S3, but there was no major improvement in performance. Google was faster in processing even if an image came from a server located in AWS’s infrastructure. Using S3 links could potentially save outgoing bandwidth costs, and using S3 allows us to use much larger files (15 MB).

- Clarifai was the slowest provider but was flexible enough to increase our rate limits to 30 requests per second. It was not clear that more tagging options linearly scaled with time to ingest and tag images.

- Filestack POV: Google’s investment in their network infrastructure once again proved to be a big winner. Even with the cost of bandwidth hitting all-time lows, we have to be mindful of the egress cost of processing millions of files across multiple cloud storage providers and social media sites.
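To make the two Google options above concrete, the sketch below builds the JSON body for a label detection request in both forms: the whole file inlined as base64, and a reference to an object in Google Cloud Storage. The field names follow the Cloud Vision v1 REST API as we understand it, so check them against the current documentation. The helper names and the `gs://` URI in the usage note are made up for illustration, and sending the request (with an API key or OAuth token) is left out.

```python
import base64

# Public REST endpoint for Cloud Vision v1; authentication is required
# and is not shown in this sketch.
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def _label_feature(max_results):
    """Feature block asking for label (tag) detection."""
    return [{"type": "LABEL_DETECTION", "maxResults": max_results}]


def label_request_from_bytes(image_bytes, max_results=10):
    """Inline the entire file as base64, so the full image crosses the network."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "requests": [
            {"image": {"content": encoded}, "features": _label_feature(max_results)}
        ]
    }


def label_request_from_gcs(gcs_uri, max_results=10):
    """Point at an object already in Google Cloud Storage instead of uploading it."""
    return {
        "requests": [
            {
                "image": {"source": {"gcsImageUri": gcs_uri}},
                "features": _label_feature(max_results),
            }
        ]
    }
```

For example, `label_request_from_gcs("gs://example-bucket/herman.jpg")` produces a body of a few hundred bytes, whereas the base64 form grows to roughly four thirds of the file size, which is where the bandwidth cost comes from.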

Object recognition testing

Google Maps screenshot

| Amazon | Diagram (92%), Plan (92%), Atlas (60%), Map (60%) |

| Google | Map (92%), Plan (60%) |

| Clarifai | Map (99%), Cartography (99%), Graph (99%), Guidance (99%), Ball-Shaped (99%), Location (98%), Geography (98%), Topography (97%), Travel (97%), Atlas (96%), Road (96%), Trip (95%), City (95%), Country (94%), Universe (93%), Symbol (93%), Navigation (92%), Illustration (91%), Diagram (91%), Spherical (90%) |

| Microsoft | Text (99%), Map (99%) |

To start, we decided to use something we thought was pretty simple: a screenshot of Google Maps. All services performed pretty well here, but Microsoft was the odd man out with 99% confidence that this was “Text.”

Fruit cup

| Amazon | Fruit (96%), Dessert (95%), Food (95%), Alcohol (51%), Beverage (51%), Cocktail (51%), Drink (51%), Cream (51%), Creme (51%) |

| Google | Food (95%), Dessert (84%), Plant (81%), Produce (77%), Frutti Di Bosco (77%), Fruit (72%), Breakfast (71%), Pavlova (71%), Meal (66%), Gelatin Dessert (57%) |

| Clarifai | Fruit (99%), No Person (99%), Strawberry (99%), Delicious (99%), Sweet (98%), Juicy (98%), Food (97%), Health (97%), Breakfast (97%), Sugar (97%), Berry (96%), Nutrition (96%), Summer (95%), Vitamin (94%), Kiwi (93%), Tropical (92%), Juice (92%), Refreshment (92%), Leaf (90%), Ingredients (90%) |

| Microsoft | Food (97%), Cup (90%), Indoor (89%), Fruit (88%), Plate (87%), Dessert (38%), Fresh (16%) |

Keeping things relatively simple, we ran a test with a fruit dessert cup. Google surprisingly came in last in confidence for “fruit” as a category. Clarifai not only hit fruit, but with their wide range of categorization options, we got plenty of results around “health” tags.

Assorted peppers

| Amazon | Bell Pepper (97%), Pepper (97%), Produce (97%), Vegetable (97%), Market (84%), Food (52%) |

| Google | Malagueta Pepper (96%), Food (96%), Pepperoncini (92%), Chili Pepper (91%), Vegetable (91%), Produce (90%), Cayenne pepper (88%), Plant (87%), Bird’s Eye Chili (87%), Pimiento (81%) |

| Clarifai | Pepper (99%), Chili (99%), Vegetable (98%), Food (98%), Cooking (98%), No Person (97%), Spice (97%), Capsicum (97%), Bell (97%), Hot (97%), Market (96%), Pimento (96%), Healthy (95%), Ingredients (95%), Jalapeno (95%), Cayenne (95%), Health (94%), Farming (93%), Nutrition (93%), Grow (90%) |

| Microsoft | Pepper (97%), Hot Pepper (87%), Vegetable (84%) |

We spiced things up this round by presenting a photo full of various peppers. All providers performed pretty well here, especially Google and Clarifai with the most accurate pepper tags.

Herman the Dog

| Amazon | Animal (92%), Canine (92%), Dog (92%), Golden Retriever (92%), Mammal (92%), Pet (92%), Collie (51%) |

| Google | Dog (98%), Mammal (93%), Vertebrate (92%), Dog Breed (90%), Nose (81%), Dog Like Mammal (78%), Golden Retriever (77%), Retriever (65%), Collie (56%), Puppy (51%) |

| Clarifai | Dog (99%), Mammal (99%), Canine (98%), Pet (98%), Animal (98%), Portrait (98%), Cute (98%), Fur (96%), Puppy (95%), No person (92%), Retriever (91%), One (91%), Eye (90%), Looking (89%), Adorable (89%), Golden Retriever (88%), Little (87%), Nose (86%), Breed (86%), Tongue (86%) |

| Microsoft | Dog (99%), Floor (91%), Animal (90%), Indoor (90%), Brown (88%), Mammal (71%), Tan (27%), Starting (18%) |

Next up was a stock photo of a black dog; let’s call him Herman. All services fared well. Most interesting here is that Amazon, Google, and Clarifai all tagged him as “Golden Retriever.” I’m not confident that Herman is a golden retriever, but three out of four services think he is.

Flipped Herman the dog

| Amazon | Animal (98%), Canine (98%), Dog (98%), Mammal (98%), Pet (98%), Pug (98%) |

| Google | Dog (97%), Mammal (92%), Vertebrate (90%), Dog Like Mammal (70%) |

| Clarifai | Dog (99%), Mammal (97%), No Person (96%), Pavement (96%), Pet (95%), Canine (94%), Portrait (94%), One (93%), Animal (93%), Street (93%), Cute (93%), Sit (91%), Outdoors (89%), Walk (88%), Sitting (87%), Puppy (87%), Looking (87%), Domestic (87%), Guard (86%), Little (86%) |

| Microsoft | Ground (99%), Floor (90%), Sidewalk (86%), Black (79%), Domestic Cat (63%), Tile (55%), Mammal (53%), Tiled (45%), Dog (42%), Cat (17%) |

Going back to our adorable stock photo of Herman, we flipped the image and tossed it back into the mixer. The flipped Herman gave Microsoft some heartburn, as “Dog” fell to 42%, and it even gave a 17% certainty of Herman being a “Cat.”
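Comparisons like this are easier to repeat if the tag lists are parsed into data. The snippet below is a small sketch that reads rows in the same “Label (NN%)” format used in our tables and looks up how confident each provider was about “Dog” in the flipped photo. The names `parse_tags` and `FLIPPED_HERMAN` are ours, chosen for illustration, and the Clarifai row is shortened to its first five tags.

```python
import re

# Matches one "Label (NN%)" entry, tolerating a missing space before "(".
TAG_PATTERN = re.compile(r"\s*(.+?)\s*\((\d+)%\)\s*")


def parse_tags(row):
    """Turn 'Dog (97%), Mammal (92%)' into {'Dog': 97, 'Mammal': 92}."""
    tags = {}
    for part in row.split(","):
        match = TAG_PATTERN.fullmatch(part)
        if match:
            tags[match.group(1)] = int(match.group(2))
    return tags


# Rows copied from the flipped Herman table (Clarifai: first five of twenty tags).
FLIPPED_HERMAN = {
    "Amazon": "Animal (98%), Canine (98%), Dog (98%), Mammal (98%), Pet (98%), Pug (98%)",
    "Google": "Dog (97%), Mammal (92%), Vertebrate (90%), Dog Like Mammal (70%)",
    "Clarifai": "Dog (99%), Mammal (97%), No Person (96%), Pavement (96%), Pet (95%)",
    "Microsoft": "Ground (99%), Floor (90%), Sidewalk (86%), Black (79%), "
                 "Domestic Cat (63%), Tile (55%), Mammal (53%), Tiled (45%), "
                 "Dog (42%), Cat (17%)",
}

# Confidence (in percent) that each provider assigned to the label "Dog".
dog_confidence = {
    provider: parse_tags(row).get("Dog") for provider, row in FLIPPED_HERMAN.items()
}
least_confident = min(dog_confidence, key=dog_confidence.get)
```

Here `dog_confidence` holds 98, 97, 99 and 42 for Amazon, Google, Clarifai and Microsoft respectively, and `least_confident` is `"Microsoft"`.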

Zoomed in Herman

| Amazon | Animal (89%), Canine (89%), Dog (89%), Labrador Retriever (89%), Mammal (89%), Pet (89%) |

| Google | Dog (96%), Mammal (92%), Vertebrate (90%), Dog Like Mammal (69%) |

| Clarifai | Animal (99%), Mammal (98%), Nature (97%), Wildlife (97%), Wild (96%), Cute (96%), Fur (95%), No Person (95%), Looking (93%), Portrait (92%), Grey (92%), Dog (91%), Young (89%), Hair (88%), Face (87%), Chordata (87%), Little (86%), One (86%), Water (85%), Desktop (85%) |

| Microsoft | Dog (99%), Animal (98%), Ground (97%), Black (97%), Mammal (97%), Looking (86%), Standing (86%), Staring (16%) |

Ending our round of Herman-based tests, we presented a zoomed-in image to each of the platforms. Kudos to Amazon for “Labrador Retriever,” as that is Herman’s breed. Everything else here is pretty standard.

Telephone Logo

| Amazon | Emblem (51%), Logo (51%) |

| Google | Text (92%), Font (86%), Circle (64%), Trademark (63%), Brand (59%), Number (53%) |

| Clarifai | Business (96%), Round (94%), No Person (94%), Abstract (94%), Symbol (92%), Internet (90%), Technology (90%), Round out (89%), Desktop (88%), Illustration (87%), Arrow (86%), Conceptual (85%), Reflection (84%), Guidance (83%), Shape (82%), Focus (82%), Design (81%), Sign (81%), Number (81%), Glazed (80%) |

| Microsoft | Bicycle (99%), Metal (89%), Sign (68%), Close (65%), Orange (50%), Round (27%), Bicycle Rack (15%) |

In an effort to stretch the image categories, we decided to throw a logo into our testing and see what was reported. Kudos to Amazon for reporting “Logo,” as that is what we were expecting and no one else hit it. Microsoft leading with 99% certainty that this is a “Bicycle” was the first big miss we found.

Uncle Sam logo

| Amazon | Clown (54%), Mime (54%), Performer (54%), Person (54%), Costume (52%), People (51%) |

| Google | Figurine (55%), Costume Accessory (51%) |

| Clarifai | Lid (99%), Desktop (98%), Man (97%), Person (96%), Isolated (95%), Adult (93%), Costume (92%), Young (92%), Retro (91%), Culture (91%), Style (91%), Boss (90%), Traditional (90%), Authority (89%), Party (89%), Crown (89%), Funny (87%), People (87%), Celebration (86%), Fun (86%) |

| Microsoft | No data returned |

This was the only test we ran that stumped one of our contestants: a logo of Uncle Sam was not detected at all by Microsoft. The other three services varied in their responses, but none of them was able to correctly identify the logo.

What did we learn?

- Google Vision was quite accurate and detailed. There were no major misses; the biggest was the telephone logo picture, but it did pick up “brand.”

- Microsoft took a bit of a beating, as it did not perform well on flipped Herman, or the telephone logo. The “Uncle Sam” logo also returned no data.

- Amazon Rekognition was pretty reliable and did not have any major surprises. As far as feature maturity goes, this is one of the newest entrants to the market. Given Amazon Web Services’ track record, we expect this service to grow very fast and new features to be added at a blistering pace.

- Clarifai had by far the most image tags, but had a few hiccups along the way. The zoomed Herman pushed “Dog” down to 91%, behind tags such as “Nature” and “Wildlife.” More tags are not always better, as some of them were inaccurate.

- Filestack POV: Logo detection is very difficult to do accurately. We also learned that more image tagging categories do not necessarily correlate with more accurate tagging.

Winner: Google Vision

Conclusion

With speed and accuracy being our top priorities, Google’s Vision API was the winner this time around. We encourage you to pick the service that solves your needs best, as every platform has strengths and weaknesses. We will continue to test, review, and ultimately integrate with best of breed services – this is only the beginning. Our goal is to ensure we provide our customers with the best value for their dollar and help them solve complex challenges around intelligent content.

If you want to check out our image tagging implementation, reach out to us to see a demo today!
