Machine learning services such as Microsoft Cognitive Services and Google Vision provide a wrapper over complex and resource-intensive algorithms, turning dense and arcane problems into simple REST requests. But these services, despite being state-of-the-art, cannot do all of the work for you (though some do more than others). And when it comes to optical character recognition (OCR), which allows you to extract text from images and documents, sometimes providing better image data is the difference between good and great results.

Google vs Microsoft OCR

These two companies are not alone in their ability to provide OCR, but they stand out for their ease-of-use and cheap pricing tiers. Though not optimized for industry-specific text extraction (such as archival quality photos, historic documents, noisy or damaged documents, and so forth), they provide excellent deep-learning-based OCR that will work for the majority of general use cases, such as images; letters and documents printed with well-known fonts; PowerPoint presentations; and other common image texts.

Key Differences Between Google and Microsoft OCR

Both return the extracted text from an image, as well as bounding boxes to tell you where each word is. Both perform well with clear text and will struggle with tiny, difficult to read or handwritten text. Some of the notable differences include:

- Microsoft returns an array of lines, as well as words, so you know which words make up which distinct blocks of text in the photo. Google returns individual words, and then additionally a single object that contains all the text found in the photo, but does not have a line-by-line breakdown.

- Microsoft automatically detects rotated text and attempts to align the photo so the text can be read horizontally (this improves results). They also return the degree at which the photo was rotated so you know how to position the given bounding boxes. Google expects well-aligned text from the user and does not preprocess in this way, so you’ll have to determine whether or not your image needs to be rotated before sending it to them.

Preprocessing Photos

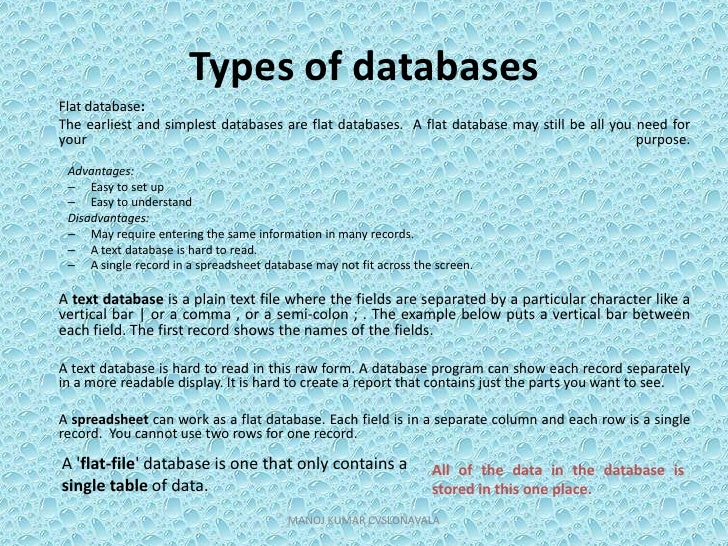

Testing Microsoft Cognitive Services against some hard-to-read text shows how important image preprocessing can be in getting acceptable results. The image above is a slide from a PowerPoint presentation, with a complex background that lacks distinct contrast from the text. Microsoft returns the following for the unaltered version:

Types of databases

Flat databaSé’.

The earliest and Finyplesi ciÅtabases pre flat dat?beses. flat may still be need for

your-

purpose:

‘”von ta geS:

Fasy to setup

Easy to understand

Disödvanta ges:

May require entering fhe Fame in!prmåtiomiri mgny

This is…not ideal. The Microsoft model appears not to know which language or character set to use. The color and background are throwing the algorithm off, and this could be a constant problem if you’re extracting text from PowerPoint presentations or similar kinds of images, which frequently have pictures or textures for backgrounds.

We can remove the background by running the image through Filestack’s black-and-white filter, with the threshold set to 55, and sending it off to Microsoft again. This time we get:

Types of databases

Flat database:

The ear!iest and simplest databases are flat databases. A flat database may still be you need for

your

Advontoges:

Easi to set up

Easy to understand

Disadvantages:

May require entering the same information in many records

Markedly improved! Although it’s still not perfect, it is much better than before. Hopefully this shows just how integral image preprocessing is when trying to use intelligent services to extract data. Remember, if you have a hard time reading it, the machine probably will, too.

Filestack is a dynamic team dedicated to revolutionizing file uploads and management for web and mobile applications. Our user-friendly API seamlessly integrates with major cloud services, offering developers a reliable and efficient file handling experience.

Read More →