Can you imagine a world where you can’t upload files in any application? No resumes submitted, no profile pictures updated, and no media shared on social platforms and blogs. As a modern user, you’ll find such a world boring, since file uploads are a fundamental part of user interaction.

Despite how common file uploads are, building an upload tool from scratch isn’t as straightforward as it seems. For instance, developers often run into challenges like handling large files, managing upload errors, validating file types, and securing storage.

To handle these problems without sacrificing quality or time, many developers now turn to a dedicated file uploader library: a solution that handles everything from UI and validation to security and cloud integration. In this article, you’ll learn what these libraries do, how they benefit you, and when to use one in your project.

Key Takeaways

- Modern users expect fast, secure, and reliable file upload capabilities in most applications.

- Building file upload tools from scratch is often inefficient and risky.

- A dedicated file uploader library simplifies development, saves time, and reduces bugs and vulnerabilities.

- When browsing such libraries, look for client-side validation, plugin support, strong documentation, and robust security features.

- Not every project needs one, but many can greatly benefit from a dedicated uploader library.

What Is a Dedicated File Uploader Library?

A dedicated file uploader library is a third-party tool or framework that simplifies file uploads in mobile or web applications. It usually provides pre-built components and backend logic that handle the complex tasks involved in uploading files. For example, it can take care of network error handling and integration with storage services such as AWS.

How Does It Differ from Native File Uploads?

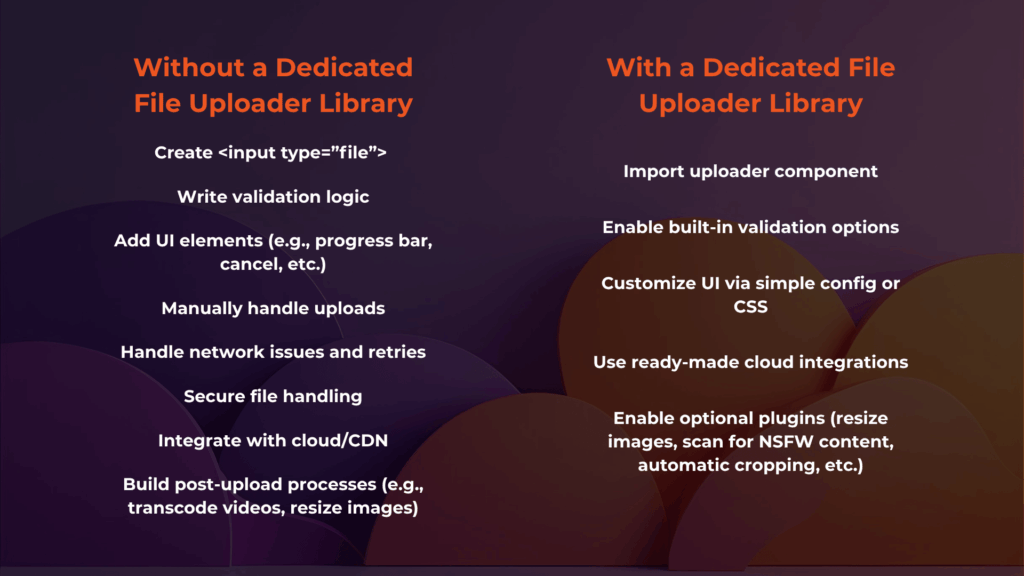

Without a dedicated file uploader library, you would rely on native HTML, namely the <input type="file"> element. While easy to implement, it’s also very limited, as you must manually implement all other logic, such as validation, previews, and storage.

If you decide to build it yourself, you’ll write the logic using JavaScript and server-side code. However, this process is often time-consuming and error-prone. For instance, just to protect your application, server, and users from potential threats, you must implement most file upload security best practices yourself.

In contrast, a dedicated file uploader library abstracts this complexity, giving you what you need without making you do all the work. Some popular libraries include:

- Filestack: A robust option with built-in transformations, AI features (e.g., OCR, NSFW detection), virus scanning, CDN delivery, and more.

- Uppy: Modular and extensible, supports resumable uploads, and has a clean UI.

- Fine Uploader: Simple to use and open source, with legacy browser support and zero dependencies.

Key Benefits of Using a Dedicated File Uploader Library

Using a dedicated file uploader library allows you to cut through the difficult parts of implementing file uploads. So, instead of focusing on how you’ll process, protect, and store uploads, you can focus on your app’s core functionality. Here are the most important benefits that a dedicated file uploader library can give you.

Simplified Development Workflow

One of the most immediate benefits you’ll notice after adopting a dedicated file uploader library is how much development time it saves. Most libraries offer:

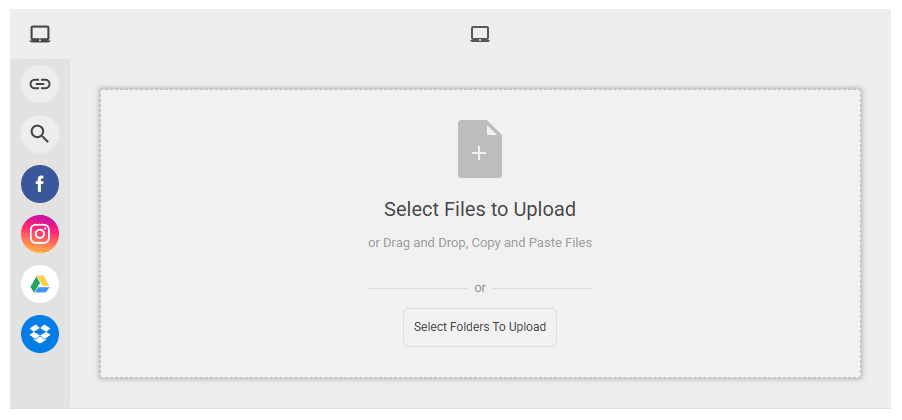

- Prebuilt UI elements like drag-and-drop uploads, upload buttons, file previews, progress bars, and so on.

- Native support for multi-file uploads, network failure handling, and CDN delivery.

- API integrations that handle complex tasks such as issuing secure upload tokens, managing multipart uploads, and configuring upload endpoints.

Enhanced User Experience

Modern users expect seamless file uploads, especially on mobile. A good file upload library can give you:

- Real-time progress bars and clear error messages, which improve transparency.

- Resumable uploads, which let users pick up where they left off in case of network failure.

- Touch-friendly controls and responsive layouts that make uploads smooth across all devices and for all users.

These features collectively lead to a faster and more intuitive experience that helps reduce user frustration.

Improved Security and Validation

Handling file uploads without proper security is risky. For example, malicious users might upload executable files, inject scripts, or exploit weak endpoints. Most dedicated libraries help prevent this by:

- Offering client-side and server-side validation, such as allowing only specific formats (e.g., JPEG or PDF), limiting file sizes, and blocking dangerous file types (e.g., .exe).

- Including virus scanning hooks or integrations with antivirus APIs.

- Supporting secure token-based uploads and authenticated endpoints to prevent unauthorized access.

These built-in protections reduce possible vulnerabilities and help keep your infrastructure safe. Beyond these, make sure that you also follow other best practices, such as renaming uploaded files, setting size limits, and storing files outside the web root.
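To make the file-renaming practice concrete, here is a minimal server-side sketch in TypeScript. The function name `buildStorageName`, the extension allowlist, and the injected `generateId` callback are all assumptions made for this example and are not part of any specific library.

```typescript
// Hypothetical allowlist for this sketch; adjust it to your own requirements.
const ALLOWED_EXTENSIONS: ReadonlySet<string> = new Set([".jpg", ".jpeg", ".png", ".pdf"]);

// Builds the name a file is stored under, ignoring the user-supplied name
// except for its (allowlisted) extension.
// generateId is supplied by the caller; in practice it should return a
// cryptographically random value such as a UUID.
function buildStorageName(originalName: string, generateId: () => string): string {
  // Drop any directory part the client may have sent (e.g. "../../evil/x.png").
  const baseName = originalName.split(/[\\/]/).pop() ?? "";
  const dot = baseName.lastIndexOf(".");
  // Only the last extension counts, so "report.pdf.exe" is treated as ".exe".
  const extension = dot > 0 ? baseName.slice(dot).toLowerCase() : "";
  if (!ALLOWED_EXTENSIONS.has(extension)) {
    throw new Error(`File extension "${extension}" is not allowed`);
  }
  return `${generateId()}${extension}`;
}
```

Storing files under generated names like this avoids path traversal and name collisions. The extension check alone does not prove what a file contains, so it works best alongside the other validation steps mentioned above.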

Scalability and Performance

As your application grows, so does your user base and, more importantly, the number of files it uploads. Uploading an image is one thing; processing tons of HD videos per user is another. Thankfully, dedicated libraries can help your app scale properly by:

- Efficiently handling large file uploads and batches using chunked uploads or streaming.

- Integrating with CDNs (content delivery networks) to deliver files faster to users, regardless of geographical location.

All these features lead to fewer timeouts, fewer failures, and faster uploads, no matter how heavy the traffic gets.
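The idea behind chunked uploads can be sketched in a few lines of TypeScript. The helper below only plans the byte ranges; the names `ChunkRange` and `planChunks` are hypothetical, and real libraries add their own upload, ordering, and reassembly logic on top.

```typescript
interface ChunkRange {
  index: number;
  start: number; // inclusive byte offset
  end: number;   // exclusive byte offset, suitable for Blob.slice(start, end)
}

// Splits a file of fileSize bytes into consecutive ranges of at most chunkSize bytes.
function planChunks(fileSize: number, chunkSize: number): ChunkRange[] {
  if (!Number.isInteger(fileSize) || fileSize < 0) {
    throw new RangeError("fileSize must be a non-negative integer");
  }
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError("chunkSize must be a positive integer");
  }
  const chunks: ChunkRange[] = [];
  for (let start = 0, index = 0; start < fileSize; start += chunkSize, index++) {
    // The last chunk may be shorter than chunkSize.
    chunks.push({ index, start, end: Math.min(start + chunkSize, fileSize) });
  }
  return chunks;
}
```

In a browser, each range could be passed to `file.slice(start, end)` and sent as its own request. Because every chunk is independent, a failed chunk can be resent on its own instead of restarting the whole upload.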

Integration with Cloud Services

Many applications now rely on third-party cloud providers for storage and processing. Solid, dedicated uploader libraries can give you:

- Built-in support for cloud storage services like Amazon S3, Google Cloud Storage, and Azure Blob Storage, among others.

- Features like on-the-fly image resizing, document conversion, or video transcoding after each upload (e.g., cropping images to a certain size for profile pictures).

This kind of smooth integration removes the burden of managing file infrastructure and handling different services and file processing yourself.

Error Handling and Retry Logic

Users have little patience for failed uploads. Thankfully, good uploader libraries offer:

- Automatic retry mechanisms for failed uploads due to spotty network connections.

- Better user and developer feedback, so they know what went wrong and how to fix it.

With fewer failed uploads, your users will have a better experience, and in turn, you’ll have fewer support tickets and higher user retention.
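A common way to implement automatic retries is exponential backoff, where the wait time doubles after each failure. The TypeScript sketch below assumes that approach; `withRetry`, `backoffDelay`, and the `RetryOptions` fields are names invented for this example, and individual libraries may use different strategies.

```typescript
interface RetryOptions {
  maxAttempts: number; // total tries, including the first one
  baseDelayMs: number; // wait before the second try
  maxDelayMs: number;  // upper bound for any single wait
  // Injectable so that waiting can be replaced in tests.
  sleep?: (ms: number) => Promise<void>;
}

// Delay before the next try after the given failed attempt (1-based):
// base, 2x base, 4x base, ... capped at maxDelayMs.
function backoffDelay(attempt: number, baseDelayMs: number, maxDelayMs: number): number {
  return Math.min(baseDelayMs * 2 ** (attempt - 1), maxDelayMs);
}

const defaultSleep = (ms: number): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, ms));

// Runs task until it succeeds or maxAttempts is reached, then rethrows the last error.
async function withRetry<T>(task: () => Promise<T>, options: RetryOptions): Promise<T> {
  if (options.maxAttempts < 1) {
    throw new RangeError("maxAttempts must be at least 1");
  }
  const sleep = options.sleep ?? defaultSleep;
  let lastError: unknown;
  for (let attempt = 1; attempt <= options.maxAttempts; attempt++) {
    try {
      return await task();
    } catch (error) {
      lastError = error;
      if (attempt < options.maxAttempts) {
        await sleep(backoffDelay(attempt, options.baseDelayMs, options.maxDelayMs));
      }
    }
  }
  throw lastError;
}
```

An upload call would be wrapped as `withRetry(() => uploadChunk(chunk), { maxAttempts: 5, baseDelayMs: 500, maxDelayMs: 8000 })`, where `uploadChunk` stands in for whatever function performs the request. In production code, it is usually worth retrying only errors that are likely to be temporary, such as timeouts.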

When Should You Choose a Dedicated File Uploader Library?

It’s true that a dedicated file uploader might seem overkill for a simple contact form. However, it is a great investment if your project includes:

- High file upload volumes or large file uploads (e.g., HD videos, audio, or design assets)

- Tight deadlines, where building from scratch would slow you down

- Multiple upload entry points, such as user profiles, social media posts, or messaging features

- Cloud or CDN integration, especially if you plan to scale or serve a global user base

- Rich media support, such as image cropping, watermarks, borders, filters, and so on

- Strict reliability needs, such as a success rate above 99% for every kind of upload

Overall, teams that want to focus on core product features, work fast, and avoid reinventing the wheel tend to benefit the most. And while creating your own file uploader is definitely possible, it usually takes longer, especially when complex features are involved. So, assess your requirements and weigh your options before you commit to either integration or building from scratch.

Common Features to Look for in a File Uploader Library

It’s one thing to know when to opt for a dedicated uploader library and another to know which features to look for. When choosing a library, consider the following capabilities that help you achieve better flexibility, reliability, and security.

Client-Side Validation

Client-side validation allows the library to catch invalid files before they reach your server. Examples include rejecting disallowed file types (such as .exe or .bat), enforcing maximum size limits, and restricting the number of files per upload. This type of validation saves bandwidth and reduces load on your backend, although it should complement server-side checks rather than replace them.
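As a rough illustration of these checks, the following TypeScript sketch validates a batch of selected files before anything is sent. The `FileLike` shape, the `ValidationRules` options, and the function name `validateFiles` are assumptions made for this example rather than the API of any particular library.

```typescript
// Minimal shape shared with the browser's File object (name, size, type).
interface FileLike {
  name: string;
  size: number; // bytes
  type: string; // MIME type as reported by the browser
}

// Hypothetical rule set for this sketch; real libraries expose their own options.
interface ValidationRules {
  allowedMimeTypes: string[];
  blockedExtensions: string[]; // lowercase, including the dot, e.g. ".exe"
  maxSizeBytes: number;
  maxFiles: number;
}

// Returns a list of human-readable problems; an empty list means the batch is acceptable.
function validateFiles(files: FileLike[], rules: ValidationRules): string[] {
  const errors: string[] = [];
  if (files.length > rules.maxFiles) {
    errors.push(`Too many files: ${files.length} selected, ${rules.maxFiles} allowed`);
  }
  for (const file of files) {
    const dot = file.name.lastIndexOf(".");
    const extension = dot >= 0 ? file.name.slice(dot).toLowerCase() : "";
    if (rules.blockedExtensions.includes(extension)) {
      errors.push(`${file.name}: extension ${extension} is not allowed`);
    } else if (!rules.allowedMimeTypes.includes(file.type)) {
      errors.push(`${file.name}: type ${file.type || "unknown"} is not allowed`);
    }
    if (file.size > rules.maxSizeBytes) {
      errors.push(`${file.name}: exceeds the ${rules.maxSizeBytes}-byte limit`);
    }
  }
  return errors;
}
```

In a browser, the `files` argument could be built with `Array.from(input.files ?? [])`, since `File` objects already have these three properties. Both the file name and the reported MIME type are easy to fake, which is why the server should repeat these checks.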

Plugin Architecture

As your app grows, your needs will evolve. Good libraries provide extensibility and customization, allowing you to enhance functionality without rewriting your core code.

For example, you may want to upload directly to AWS S3, compress files before uploading, transcode video files, or implement custom analytics or logging. A plugin system makes these possible, and a good file uploader library should let you easily hook plugins into the upload pipeline.
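To show what hooking plugins into an upload pipeline can look like, here is a deliberately small TypeScript sketch. The `UploadPipeline` class, the `UploadPlugin` interface, and the two example plugins are hypothetical and only illustrate the general pattern; each real library defines its own plugin contract.

```typescript
interface UploadItem {
  name: string;
  size: number;
  meta: Record<string, string>;
}

// A plugin is a named step that receives an item and returns a (possibly changed) item.
interface UploadPlugin {
  name: string;
  beforeUpload(item: UploadItem): UploadItem;
}

class UploadPipeline {
  private readonly plugins: UploadPlugin[] = [];

  // Registers a plugin; returning `this` allows chained calls.
  use(plugin: UploadPlugin): this {
    this.plugins.push(plugin);
    return this;
  }

  // Passes the item through every plugin in registration order.
  run(item: UploadItem): UploadItem {
    return this.plugins.reduce((current, plugin) => plugin.beforeUpload(current), item);
  }
}

// Example plugins (illustrative only).
const normalizeName: UploadPlugin = {
  name: "normalize-name",
  beforeUpload: (item) => ({ ...item, name: item.name.toLowerCase() }),
};

const tagSource: UploadPlugin = {
  name: "tag-source",
  beforeUpload: (item) => ({ ...item, meta: { ...item.meta, source: "web" } }),
};

const prepared = new UploadPipeline()
  .use(normalizeName)
  .use(tagSource)
  .run({ name: "Report.PDF", size: 2048, meta: {} });
```

The core pipeline never changes when a new step is needed. Adding compression, logging, or analytics becomes a matter of writing one more plugin and registering it with `use`.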

Security Features

You should always prioritize security, especially in applications that allow file uploads. A robust file uploader should come with built-in controls to minimize risk. For example, a good uploader lets you sanitize harmful metadata or scripts from image or document uploads.

Token-based uploads are another essential security feature, preventing unauthorized uploads or overwrites by issuing time-limited, permission-specific URLs. Finally, you should look out for permission control features that ensure users only access files they’re authorized to access.
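One possible way to build such time-limited, permission-specific tokens is to sign the granted path and expiry with an HMAC. The TypeScript sketch below assumes a Node.js server and uses its built-in `crypto` module; the names `UploadGrant`, `issueUploadToken`, and `verifyUploadToken` are invented for this example, and hosted services typically provide their own signing scheme.

```typescript
import { Buffer } from "node:buffer";
import { createHmac, timingSafeEqual } from "node:crypto";

interface UploadGrant {
  path: string;      // the only storage path this token may write to
  expiresAt: number; // Unix time in milliseconds
}

function sign(payload: string, secret: string): string {
  return createHmac("sha256", secret).update(payload).digest("hex");
}

// Produces "<base64url payload>.<hex signature>".
function issueUploadToken(grant: UploadGrant, secret: string): string {
  const payload = Buffer.from(JSON.stringify(grant)).toString("base64url");
  return `${payload}.${sign(payload, secret)}`;
}

// Accepts the token only if the signature matches, the path is the granted one,
// and the token has not expired at time `now`.
function verifyUploadToken(token: string, secret: string, path: string, now: number): boolean {
  const [payload, signature] = token.split(".");
  if (!payload || !signature) return false;
  const expected = Buffer.from(sign(payload, secret));
  const received = Buffer.from(signature);
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  if (expected.length !== received.length || !timingSafeEqual(expected, received)) {
    return false;
  }
  const grant = JSON.parse(Buffer.from(payload, "base64url").toString("utf8")) as UploadGrant;
  return grant.path === path && now < grant.expiresAt;
}
```

The server would issue a token after authenticating the user and then check it on the upload endpoint with the current time, for example `verifyUploadToken(token, secret, requestedPath, Date.now())`. The secret stays on the server, so clients cannot forge or extend a grant.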

Developer Support

No matter how powerful a library is, it’s only as usable as its documentation and community support. Look for signs of active maintenance and healthy developer engagement. For instance, the best file uploaders come with up-to-date documentation with real examples and code snippets.

You should also find activity on GitHub, Stack Overflow, and other community forums. Furthermore, you should see regular updates (via blogs, social media posts, or a YouTube channel). Lastly, the library should have clear licensing terms, possibly including dedicated support.

Together, these features create a foundation for scalable, maintainable, and secure file handling. The best libraries give you power and flexibility without overcomplicating your codebase. Put simply, the best libraries give you exactly the features that you need and the level of control that you want.

Final Thoughts

A dedicated file uploader library is more than a mere convenience. By letting you quickly implement complex upload features, secure your infrastructure, and prepare for growth, it provides benefits that manual implementation rarely matches. So instead of writing and maintaining your own file upload code, you can rely on a proven solution.

However, as stated earlier, you should always consider your project requirements first. Will your application handle dozens, hundreds, or even more uploads per day? Do you need to handle security for your uploads and server(s)? Do you require integration with popular cloud storage services as well as CDN support?

If you answered yes to any of these, chances are you need to consider implementing a library purpose-built for file uploads. So try one out! Your development team will thank you, and so will your users. Ready to simplify file uploads and scale with confidence? Explore Filestack and see how effortless it can be.

A Product Marketing Manager at Filestack with four years of dedicated experience. As a true technology enthusiast, they pair marketing expertise with a deep technical background. This allows them to effectively translate complex product capabilities into clear value for a developer-focused audience.
